Part 1 - Building a TestDsl

Tests should react to behavioral changes but be insensitive to structural changes. We call tests that do not fulfill the second condition structure-cementing. They make it difficult or even impossible to change the structure and architecture. Teams that end up with such tests test less code and improve less code.

With the right approach and a TestDsl, however, we can avoid cementation completely and write tests that turn from integration tests into unit tests after changing just one line of test code.

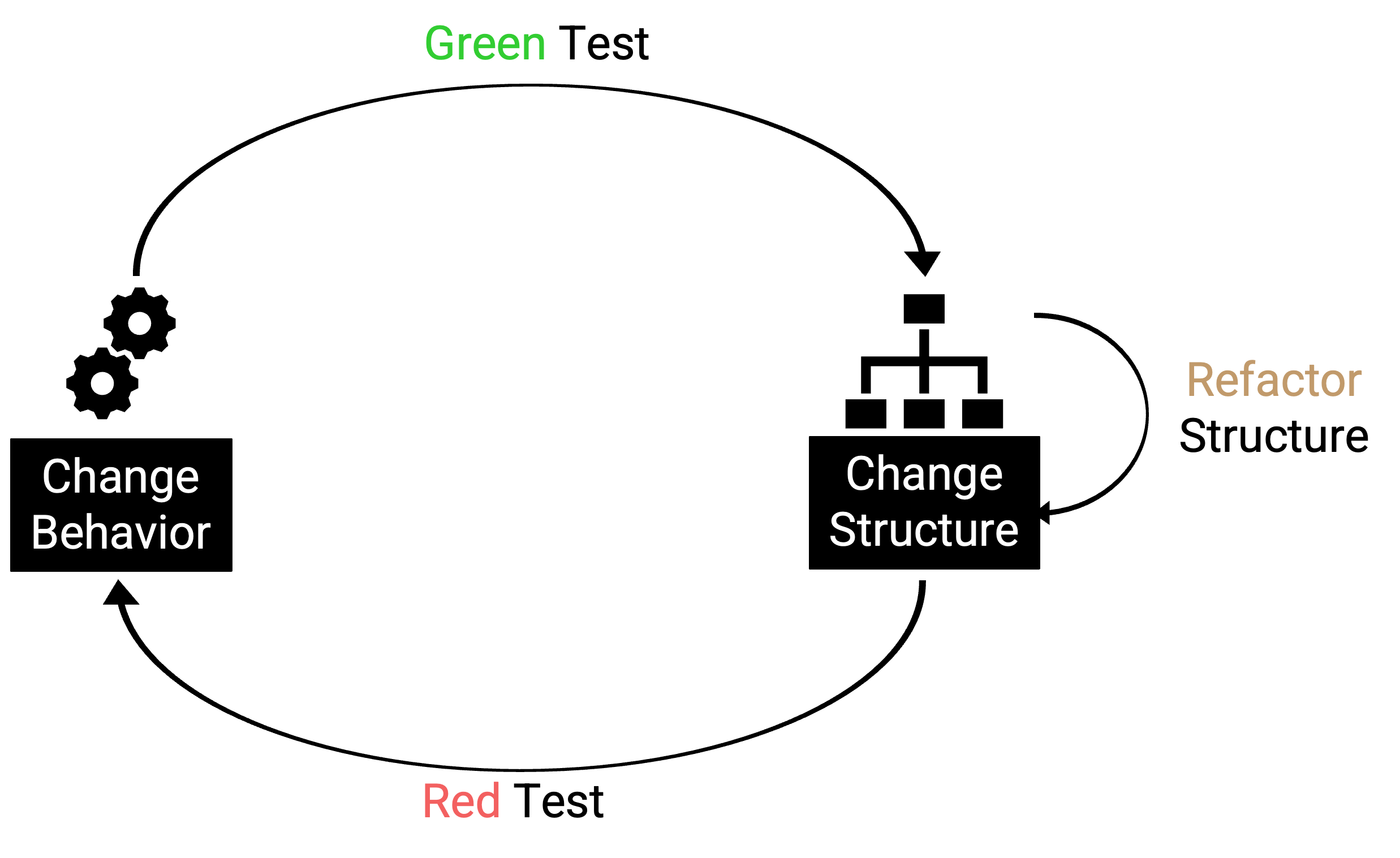

The two modes of development

If we change behavior, we should have to change tests. When we restructure code (add, rename or remove methods, classes, parameters or fields), we should not have to change the tests. Both conditions are essential because agile developers constantly jump back and forth between the two modes: change behavior and change structure.

A failing test forces us to jump into change behavior mode to get it green again. Once the test is green, we jump back into change structure mode. The green test now provides the safety net we need to change the structure safely. Whenever we change the structure (i.e. refactor), the tests tell us whether we did so without changing behavior (see figure). The same applies if we write our tests after the implementation; the transition between the modes is just less pronounced.

Changing behavior is of course risky, because a desired change in behavior can bring an undesired one with it. So we want to spend most of our time in change structure mode and use techniques like preparatory refactoring [1] that make our behavior changes as simple and manageable as possible.

However, this also requires tests that allow structural changes instead of cementing them. Such tests allow us to proceed in very small, manageable steps. A difficult change is first prepared with 10 to 20 “r” (refactor) commits so that we can then make a very small “F” (feature) commit. The commit format is Arlo’s Commit Notation [2], because it encodes the risk of each commit.

“When faced with a hard change, first make it easy (warning, this may be hard), then make the easy change.” (Kent Beck [3])

However, if the structure is cemented, then not only structural changes but also feature-related behavioral changes become more difficult. This is because we no longer build features where they make sense, but where they are easiest to integrate. As a result, the code becomes increasingly confusing. At first, code becomes hard to find because it ends up in the simplest place rather than the most sensible one. Later on, there are more and more unexpected dependencies, more and more unknown unknowns. We change one place, and this changes behavior in a completely different place.

Either the structure-cementing tests have destroyed maintainability, or we have deleted them and let regressions in.

Tests are structure-cementing in two ways:

- through redundancy: tests use the same structure in several places. The more often the same structure (class, method) is used and the more heavily it is mocked, the more the design is cemented.
- by testing at the wrong level: we test unstable elements instead of modules.

In part 1 of this article series, we look at how a TestDsl helps us avoid structure cementation through redundancy. In part 2, we explain the concepts of the Dsl in more detail, and part 3 deals with the correct test levels.

The initial test

We can see cementation through redundancy in the initial test [Initial test].

```java
@Test
void should_be_able_to_rent_book(){
    // given
    var book = new Book(bookId("b"), "Refactoring", "Martin Fowler");      // (1)
    var permission = new Permission(permissionId("p"), CAN_RENT_BOOK);
    var role = new Role(roleId("r"), "Renter", permission.id);
    var user = new User(userId("u"), "Alex Mack", role.id);

    var books = new InMemoryBooksDouble();                                  // (2)
    var permissions = new InMemoryPermissionsDouble();
    var roles = new InMemoryRolesDouble();
    var users = new InMemoryUsersDouble();

    books.add(book);                                                        // (3)
    permissions.add(permission);
    roles.add(role);
    users.add(user);

    var testee = new RentingService(new ClockDouble(), books, permissions, roles, users /* ... */); // (4)

    // when
    var result = testee.rentBook(book, user);                               // (5)

    // then
    assertThat(result.isRented()).isTrue();
}
```

(1) In this example, books are the basis for most of our tests. Therefore, most tests instantiate at least one book, and each redundant instantiation cements the structure of Book a little more, since a change would require adjustments in hundreds of tests.

(2) The repositories follow the naming convention {implementation}{name of the managed entity in plural}Double, e.g. InMemoryBooksDouble. The Double suffix indicates that this is a test double [4] located in src/testFixtures (or src/test). The suffix is not necessary, but it makes finding all the doubles we have created very convenient.

(3) Our code under test loads the books from the repository, so they must be stored there first. How this is done is an irrelevant implementation detail for the test. However, repeating it in several tests cements it. It also makes the test unnecessarily long.

(4) The more often we instantiate the RentingService, the less inclined we are to change its dependencies. The dependencies of our service are cemented.

(5) The method whose behavior we actually want to test. Cementing the structure of this method with multiple tests is a trade-off we are willing to make, because this time we get something back: feedback on whether the method is pleasant and logical to use.

In addition to the test smell “structure-cementing”, this test has a few other problems:

- long test: there is a lot to read, and therefore many places for errors to hide.
- irrelevant details: does it have to be a specific book, or will any book do? Do the fields need the specified values for the test to pass?

We will therefore refactor the test in several steps and work our way towards the final TestDsl [Complete test with TestDsl Extension].

1. Refactoring - Factory Methods

We can break the redundancy and focus the tests by no longer instantiating objects directly and using factory methods instead [Factory Methods].

```java
@Test
void should_be_able_to_rent_book(){
    // given
    var book = makeBook();
    var permission = makePermission(CAN_RENT_BOOK);
    var role = makeRole(permission);
    var user = makeUser(role);
    var books = new InMemoryBooksDouble();
    // remaining test
    // ...
}

static Book makeBook(){
    return new Book(bookId("b"), "Refactoring", "Martin Fowler");
}
```

For this small example this is fine, but we quickly run into problems with this approach (also known as the Object Mother pattern [5]):

- either each new use case gets a new method (makeBook(), makeExpensiveBook() etc.),
- or the method gets dozens of optional parameters without it being clear which parameters depend on each other.

This does not mean that factory methods should never be used. Especially when introducing new structures, factory methods are great because we can create them directly in the test class where we need them. However, once we are more confident about our structure, we should first use the builder from the next section [Builder Methods] inside the factory method and then inline the factory method with our refactoring tools.
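A minimal sketch of that migration path, assuming a simplified, hypothetical Book record with only title and author and a hypothetical BookBuilder (neither is the article's actual class): in the first step, the factory method only delegates to the builder, so no caller has to change; in the second step, the IDE's Inline Method refactoring moves the builder call into each test.

```java
import java.util.Objects;

// Hypothetical, simplified entity for illustration only.
record Book(String title, String author) {
    Book {
        Objects.requireNonNull(title, "title");
        Objects.requireNonNull(author, "author");
    }
}

// Hypothetical minimal builder holding representative defaults.
class BookBuilder {
    private String title = "Refactoring";
    private String author = "Martin Fowler";

    BookBuilder withTitle(String title) {
        this.title = title;
        return this;
    }

    Book build() {
        return new Book(title, author);
    }
}

class BookFactories {
    // Step 1: keep the factory method's signature, but delegate to the builder.
    // Every existing caller keeps compiling and keeps getting the same book.
    static Book makeBook() {
        return new BookBuilder().build();
    }

    // Step 2: "Inline Method" in the IDE replaces each makeBook() call with
    // new BookBuilder().build(); afterwards makeBook() can be deleted.
}
```

Because the factory method and the builder produce equal books, the inlining step cannot change the behavior of any test.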

2. Refactoring - Simple Builder

Instead of the factory method or the object-mother pattern, we prefer to use a builder [Builder Methods].

```java
@Test
void should_be_able_to_rent_book(){
    // given
    var book = new BookBuilder().build();
    var permission = new PermissionBuilder().withPermission(CAN_RENT_BOOK).build();
    var role = new RoleBuilder().withPermissions(permission).build();
    var user = new UserBuilder().withRole(role).build();
    var books = new InMemoryBooksDouble();
    // remaining test
    // ...
}
```

If you call the build() method directly, the entity is assigned default values. With the withX() methods, we can adapt the default values to our specific test if necessary. This makes us much more flexible than with factory methods or the Object Mother pattern, because not every case needs its own method.

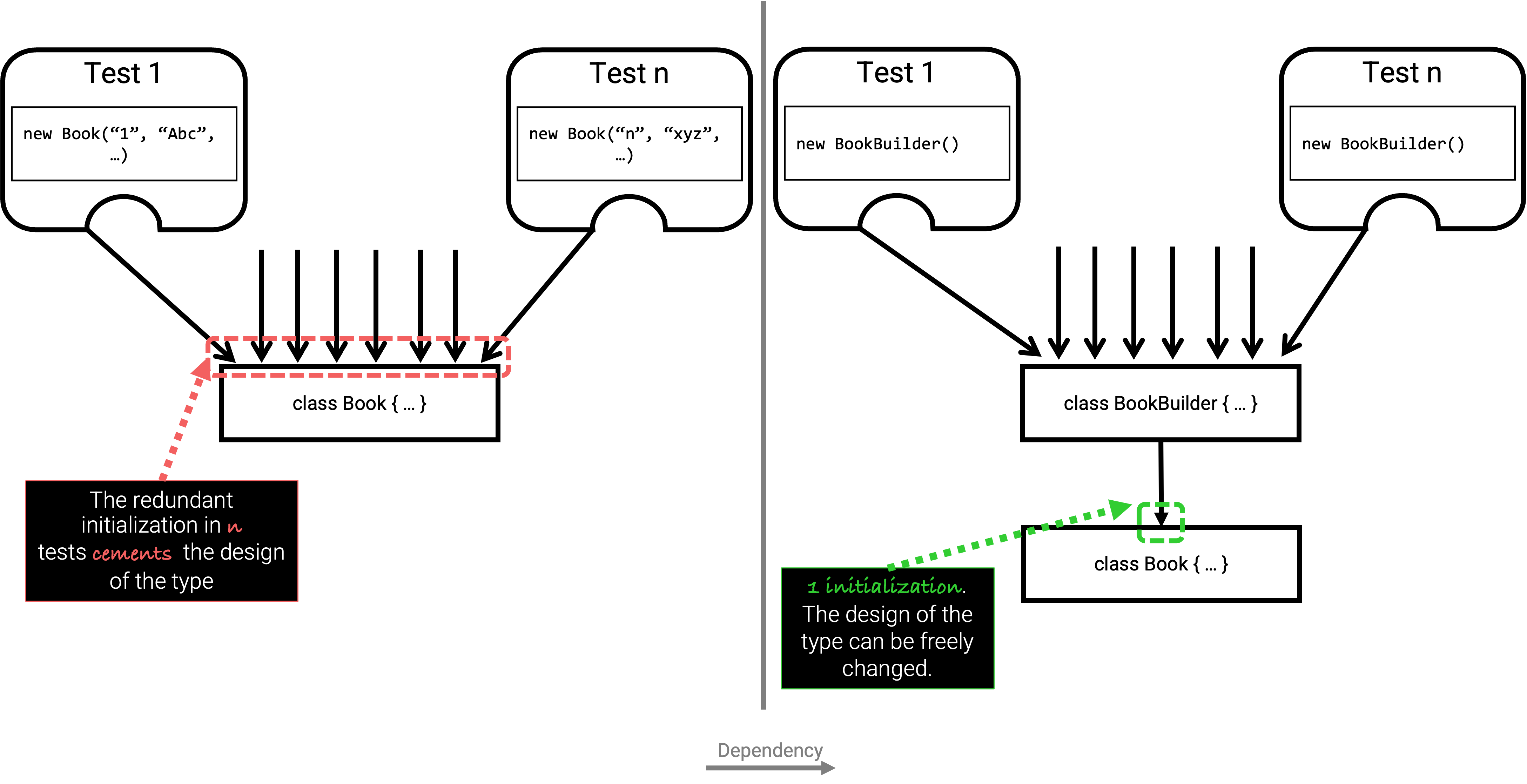

With the builder, we have also redirected the redundant dependencies to a test-specific abstraction [Cementing structure by init]. We now only have to adapt the builder to structural changes of the entity, not n tests. We can safely make these changes in the builder because the tests that already use it protect us: if existing tests turn red, we have broken something.
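To illustrate why structural changes now stay in one place, here is a sketch with hypothetical names: assume Book gains a new isbn component. Only the builder needs a default value and an updated constructor call; a test written before the change, such as anyBookHasATitle(), compiles and passes unchanged. The ISBN default is a placeholder, not a real value.

```java
// Hypothetical entity AFTER a structural change: the isbn component is new.
record Book(String id, String title, String isbn) {}

// Hypothetical builder: the only place that had to follow the change.
class BookBuilder {
    private String id = "b";
    private String title = "Refactoring";
    // New: a representative default for the new component (placeholder value).
    private String isbn = "000-0-00-000000-0";

    BookBuilder withTitle(String title) {
        this.title = title;
        return this;
    }

    Book build() {
        // Changed: the constructor call now passes isbn.
        return new Book(id, title, isbn);
    }
}

// A test written before isbn existed; it compiles and passes without edits.
class ExistingTests {
    static boolean anyBookHasATitle() {
        var book = new BookBuilder().build();
        return !book.title().isBlank();
    }
}
```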

In addition to flexible test setup and avoiding structure cementation, such a builder offers us a few more advantages:

- the test no longer mentions irrelevant details. The test above shows that it needs any book, not a specific one.
- the builder highlights essential differences between the tests. Thanks to withPermission(CAN_RENT_BOOK), we see that the user in the test absolutely needs the CAN_RENT_BOOK permission.
- the builder gives us a single place to store meaningful domain default values [Entity-TestBuilder]. This is practical documentation for developers.

```java
public class BookBuilder extends TestBuilder<Book> {
    public BookId id = ids.next(BookId.class);
    public String title = "Refactoring";                              // (1)
    public String author = "Martin Fowler";
    public Instant createdOn = clock.now();

    public BookBuilder(Clock clock, Ids ids){                          // (2)
        super(clock, ids);
    }

    public BookBuilder(){                                              // (3)
        this(globalTestClock, globalTestIds);
    }

    public Book build(){
        return new Book(id, title, author);
    }

    public BookBuilder with(Consumer<? super BookBuilder> action) {    // (4)
        action.accept(this);
        return this;
    }

    // (5)
}
```

(1) Useful defaults that are representative of production are stored here.

(2) We design the builder from the start so that the two main sources of non-deterministic tests, time and random values, can be passed in from outside.

(3) With the TestDsl refactoring, this parameterless constructor is omitted.

(4) The with() method speeds up writing the initial builder. However, you then have to get used to the builder having public fields. This is a trade-off that is acceptable for tests. Specific withX() methods are more flexible, though, because they can be overloaded.

(5) As an alternative to the generic with(), you can introduce field-specific withX() methods here.
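A sketch of the field-specific alternative, assuming a simplified, hypothetical Book record. It uses java.time.Clock as the time source, which is a swapped-in assumption, since the article's own Clock type with now() is not shown. The two withCreatedOn() overloads illustrate the flexibility of specific withX() methods: a test can pass an Instant or a more readable ISO date.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;

// Hypothetical, simplified entity.
record Book(String title, String author, Instant createdOn) {}

class BookBuilder {
    private String title = "Refactoring";
    private String author = "Martin Fowler";
    private Instant createdOn;

    // Time is injected, so tests can pass a fixed clock and stay deterministic.
    BookBuilder(Clock clock) {
        this.createdOn = clock.instant();
    }

    BookBuilder withTitle(String title) {
        this.title = title;
        return this;
    }

    BookBuilder withCreatedOn(Instant createdOn) {
        this.createdOn = createdOn;
        return this;
    }

    // Overload: accepts an ISO date such as "2024-05-01" and uses midnight UTC.
    BookBuilder withCreatedOn(String isoDate) {
        return withCreatedOn(LocalDate.parse(isoDate).atStartOfDay(ZoneOffset.UTC).toInstant());
    }

    Book build() {
        return new Book(title, author, createdOn);
    }
}
```

Passing Clock.fixed(...) to the constructor makes the default createdOn deterministic, which is the point of injecting time from outside.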

However, we are not finished yet, because the combination of permission, role and user can be modeled even more explicitly, and the test can be focused further.

3. Refactoring - Combo Builder

We introduce the concept of the Combo Builder [Using the ComboBuilder] so that we can build several separately stored objects in a coordinated manner.

```java
@Test
void should_be_able_to_rent_book(){
    // given
    var book = new BookBuilder().build();
    var userCombo = new UserComboBuilder().with(it ->
        it.hasPermissions(CAN_RENT_BOOK)
    ).build();
    // Combo includes:
    // var permissions = userCombo.permissions();
    // var role = userCombo.role();
    // var user = userCombo.user();
    var books = new InMemoryBooksDouble();
    // remaining test
    // ...
}
```

To keep the complexity of the combo builder low, it only ever builds standard cases [Entity-ComboBuilder]. For more difficult and atypical situations, e.g. if a user has several roles, we fall back on the individual builders for permission, role and user. This is important because handling all the special cases would create a lot of unmaintainable code. The rule of thumb is that a builder should never contain an if or a switch.

```java
public class UserComboBuilder implements TestBuilder<UserCombo> {
    // combination fields
    private List<Permission> permissions = Collections.emptyList();

    public UserCombo build(){
        var role = new RoleBuilder().withPermissions(permissions).build();
        var user = new UserBuilder().withRole(role).build();
        return new UserCombo(user, role, permissions);
    }

    public UserComboBuilder hasPermissions(PermissionCode... permissionCodes) {
        // build tasks are delegated to the existing PermissionBuilder
        this.permissions = Stream.of(permissionCodes)
            .map(code -> new PermissionBuilder().withPermission(code).build())
            .toList();
        return this;
    }
}
```

Using the builders has already streamlined the test considerably. However, the repositories are still an implementation detail in the test: we still need to store the created entities in repositories, and the test needs to know how to do this.

4. Refactoring - TestDsl

First we introduce the TestState.

```java
private TestState a;                                                        // (1)

@Test
void should_be_able_to_rent_book(){
    // given
    var book = a.book();                                                    // (2)
    var userCombo = a.userCombo(it -> it.hasPermissions(CAN_RENT_BOOK));
    var books = new InMemoryBooksDouble();
    var permissions = new InMemoryPermissionsDouble();
    var roles = new InMemoryRolesDouble();
    var users = new InMemoryUsersDouble();
    books.add(book);
    permissions.addAll(userCombo.permissions());
    roles.add(userCombo.role());
    users.add(userCombo.user());
    var testee = new RentingService(new ClockDouble(), books, permissions, roles, users /* ... */);

    // WHEN + THEN
    // ...
}
```

(1) The TestState is a class that knows all builders.

(2) Build tasks are always delegated to the already written builders.

At first glance, we only gain some compactness: we no longer need to instantiate an XyzBuilder or call .build(). Behind the scenes, however, we have gained much more. The TestState is now a central point that knows all created entities. We can therefore ask the state to store all created entities in the repositories and streamline our test even further [Saving state to the floor].

```java
private TestState a;
private Floor floor; // the floor that the application is built on         // (1)

@Test
void should_be_able_to_rent_book(){
    // given
    var book = a.book();
    var userCombo = a.userCombo(it -> it.hasPermissions(CAN_RENT_BOOK));
    a.saveTo(floor);                                                        // (2)
    var testee = new RentingService(floor);                                 // (3)

    // WHEN + THEN
    // ...
}
```

(1) We group all ports to the outside world in the so-called Floor, the floor on which our application stands. A repository is such a port, just like Clock or an external client. The Floor lets us control flexibly how our testee communicates with the outside world in tests: we can pull the floor out from under our application's feet and put a much more testable floor in its place. In the ports & adapters architecture [6], the Floor corresponds to the driven ports, but not to the driving ports. Since it is easy to confuse whether something is driven or driving, those terms were ruled out. Floor was chosen as the identifier because it is short and provides an analogy to the forest, with its forest floor and forest canopy. Alternative names for driven ports (outcomes) and driving ports (triggers) were not yet known at the time.

(2) With this call, we save book, permissions, role and user in their respective repositories. In theory, the call to a.book() could already have saved the book in the BookRepository. However, saveTo() makes saving more explicit and also gives us the flexibility to create entities that do not automatically end up in repositories.

(3) We made the Floor part of our production code. To instantiate service classes, you only ever need the Floor [RentingServices extracts only required dependencies] and no longer have to pass the concrete dependencies.
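The article does not show how TestState remembers the entities it creates. One possible sketch, assuming hypothetical, simplified Book and User records and a Floor class reduced to two in-memory repositories: every factory method records what it builds, and saveTo() writes the recorded entities into the floor's repositories only when the test asks for it.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Consumer;

// Hypothetical, simplified entities.
record Book(String id, String title) {}
record User(String id, String name) {}

// Hypothetical in-memory repository keyed by id.
class InMemoryRepo<T> {
    private final Map<String, T> byId = new HashMap<>();

    void add(String id, T entity) { byId.put(id, entity); }
    Optional<T> findById(String id) { return Optional.ofNullable(byId.get(id)); }
    int size() { return byId.size(); }
}

// Hypothetical floor reduced to the two repositories used here.
class Floor {
    final InMemoryRepo<Book> books = new InMemoryRepo<>();
    final InMemoryRepo<User> users = new InMemoryRepo<>();
}

// Hypothetical builder with public fields, in the style of the article's BookBuilder.
class BookBuilder {
    public String id;
    public String title = "Refactoring";

    BookBuilder(String id) { this.id = id; }

    Book build() { return new Book(id, title); }
}

class TestState {
    // Everything created through this state is remembered here.
    private final List<Book> books = new ArrayList<>();
    private final List<User> users = new ArrayList<>();

    Book book(Consumer<BookBuilder> customize) {
        // Delegates to the builder; sequential ids keep tests deterministic.
        var builder = new BookBuilder("b-" + (books.size() + 1));
        customize.accept(builder);
        var book = builder.build();
        books.add(book);
        return book;
    }

    Book book() { return book(it -> {}); }

    User user(String name) {
        var user = new User("u-" + (users.size() + 1), name);
        users.add(user);
        return user;
    }

    // Explicit step: nothing reaches a repository until the test asks for it.
    void saveTo(Floor floor) {
        books.forEach(book -> floor.books.add(book.id(), book));
        users.forEach(user -> floor.users.add(user.id(), user));
    }
}
```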

To simplify dependency management, we pass the floor directly to the constructor of our production services [RentingServices extracts only required dependencies]. We don’t have to do this to use the TestDsl. Alternatively, we could have left the constructor of the service as it is and written a configureRentingService(floor) method for tests that takes the dependencies from the Floor. Both ways avoid structure cementation of the RentingService. If we used a DI container like Spring to instantiate the service, we would get the same advantage. However, many of these containers make tests slower due to their startup overhead and make test parallelization more difficult due to context caching, which is why they are not a good choice for unit tests. In general, we should write unit tests without such containers. The Spring Framework documentation shares this recommendation [7].
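The configureRentingService(floor) alternative could look roughly like this. Everything here is a hypothetical simplification: a Floor with only clock() and books(), a Books port with a single exists() method, java.time.Clock as the time source, and a RentingService whose constructor keeps its explicit parameters. Only the test-side helper knows how to pull the dependencies out of the Floor.

```java
import java.time.Clock;
import java.time.Instant;

// Hypothetical port: tells whether a book exists.
interface Books {
    boolean exists(String bookId);
}

// Hypothetical floor reduced to two ports.
interface Floor {
    Clock clock();
    Books books();
}

// Simple Floor for tests; the record accessors implement the interface methods.
record FixedFloor(Clock clock, Books books) implements Floor {}

// The production constructor keeps its explicit dependencies.
class RentingService {
    private final Clock clock;
    private final Books books;

    RentingService(Clock clock, Books books) {
        this.clock = clock;
        this.books = books;
    }

    // Hypothetical behavior: returns the rental time of an existing book.
    Instant rentBook(String bookId) {
        if (!books.exists(bookId)) {
            throw new IllegalArgumentException("Unknown book " + bookId);
        }
        return clock.instant();
    }
}

class RentingServiceFixtures {
    // Test-only helper: the single place that maps Floor ports to constructor
    // parameters. A new constructor parameter only changes this method.
    static RentingService configureRentingService(Floor floor) {
        return new RentingService(floor.clock(), floor.books());
    }
}
```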

```java
public class RentingService {
    private final Clock clock;
    private final Books books;
    // etc.

    public RentingService(Floor floor) {
        this.clock = floor.clock();
        this.books = floor.books();
        // etc.
    }
}
```

To keep the tests isolated from each other, we instantiate TestState and Floor for each test [Instantiate TestDsl in BeforeEach].

```java
private TestState a;
private Floor floor;

@BeforeEach
void init(){
    var dsl = TestDsl.of(unitFloor());
    a = dsl.testState();
    floor = dsl.floor();
}
```

The Floor itself is simply an interface that knows all dependencies [Floor of the TestDsl]. Its unit test implementation, returned by unitFloor(), returns InMemoryDoubles when its methods are called.

```java
public interface Floor {
    Clock clock();
    Books books();
    // etc.
}
```

Taken together, these changes make our test very compact [Complete test with TestDsl].

```java
private TestState a;
private Floor floor;

@BeforeEach
void init(){
    var dsl = TestDsl.of(unitFloor());
    a = dsl.testState();
    floor = dsl.floor();
}

@Test
void should_be_able_to_rent_book(){
    // given
    var book = a.book();
    var userCombo = a.userCombo(it -> it.hasPermissions(CAN_RENT_BOOK));
    a.saveTo(floor);
    var testee = new RentingService(floor);

    // WHEN
    var result = testee.rentBook(book, userCombo.user());

    // THEN
    assertThat(result.isRented()).isTrue();
}
```

We have already come a long way with this refactoring:

- we were able to express the setup for our test in just 4 lines.
- we were able to write the entire setup in the same place as the test. You can see at a glance which preconditions the test requires, and you don’t have to scroll or open another file to understand the context.
- we were able to hide irrelevant details (any book and any user will do) and highlight relevant ones (the user needs the CAN_RENT_BOOK permission).
- we have a standardized way to set up all tests.
- we were able to avoid a structure-cementing test setup.
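The listings call unitFloor() without showing its implementation. A minimal sketch, assuming a hypothetical UnitFloor class, a simplified Books port and java.time.Clock as the time source: every Floor method returns the same instance on each call, so the TestState writes to and the service reads from the same in-memory double, while a fresh UnitFloor per test keeps tests isolated.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.ZoneOffset;
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical, simplified entity.
record Book(String id, String title) {}

// Hypothetical port; in production it could be backed by a JPA repository.
interface Books {
    void add(Book book);
    Optional<Book> findById(String id);
}

// Hypothetical floor reduced to two ports.
interface Floor {
    Clock clock();
    Books books();
}

class InMemoryBooksDouble implements Books {
    private final Map<String, Book> byId = new HashMap<>();

    public void add(Book book) { byId.put(book.id(), book); }
    public Optional<Book> findById(String id) { return Optional.ofNullable(byId.get(id)); }
}

// Hypothetical unit-test floor: fixed time and in-memory doubles, created once per floor.
class UnitFloor implements Floor {
    private final Clock clock = Clock.fixed(Instant.parse("2024-01-01T00:00:00Z"), ZoneOffset.UTC);
    private final Books books = new InMemoryBooksDouble();

    public Clock clock() { return clock; }
    public Books books() { return books; }

    // Each call creates a fresh floor, so each test gets its own doubles.
    static Floor unitFloor() { return new UnitFloor(); }
}
```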

However, we can still make one improvement.

5. Refactoring - Extension

So far, we have to write the same TestDsl initialization code in a @BeforeEach method in every test class. With JUnit 5, we can make it reusable across tests with an annotation [Complete test with TestDsl Extension].

```java
@Unit                                                                       // (1)
@Test
void should_be_able_to_rent_book(TestState a, Floor floor){                 // (2)
    // given
    var book = a.book();
    var userCombo = a.userCombo(it -> it.hasPermissions(CAN_RENT_BOOK));
    a.saveTo(floor);
    var testee = new RentingService(floor);

    // WHEN
    var result = testee.rentBook(book, userCombo.user());

    // THEN
    assertThat(result.isRented()).isTrue();
}
```

(1) Our @BeforeEach is completely absorbed into the @Unit annotation.

(2) The annotation turns the two parts of our Dsl into parameters of the test.

The new annotation registers a JUnit 5 extension [8]. Such an extension can react to the test lifecycle by implementing special interfaces. We only need ParameterResolver, because it resolves the TestState and Floor parameters that our test requires [resolveParameter() of the TestDsl extension].

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import org.junit.jupiter.api.extension.ExtendWith;
import org.junit.jupiter.api.extension.ExtensionContext;
import org.junit.jupiter.api.extension.ExtensionContext.Namespace;
import org.junit.jupiter.api.extension.ParameterContext;
import org.junit.jupiter.api.extension.ParameterResolutionException;
import org.junit.jupiter.api.extension.ParameterResolver;

@Target({ ElementType.METHOD })
@Retention(RetentionPolicy.RUNTIME)
@ExtendWith(UnitTestExtension.class)                                        // (1)
public @interface Unit { }                                                  // (2)

class UnitTestExtension implements ParameterResolver {
    @Override
    public Object resolveParameter(
        ParameterContext parameterContext,
        ExtensionContext extensionContext
    ) throws ParameterResolutionException {
        var storeNamespace = Namespace.create(
            getClass(), extensionContext.getRequiredTestMethod());
        var store = extensionContext.getStore(storeNamespace);             // (3)
        var dsl = store.getOrComputeIfAbsent(
            "UNIT_TEST_DSL",
            (key) -> testDslOf(unitFloor()),                                // (4)
            UnitTestDsl.class
        );

        var parameterType = parameterContext.getParameter().getType();     // (5)
        if (parameterType.equals(TestState.class))
            return dsl.testState();
        else if (parameterType.equals(Floor.class))
            return dsl.floor();
        else
            throw new ParameterResolutionException("...");
    }

    // ...
}
```

(1) With @ExtendWith, we connect the annotation to the extension code.

(2) A normal Java annotation. The name can be chosen freely.

(3) Extensions must always save state in a store. The store is unique per namespace.

(4) This creator function is used if no Dsl has been created for the test yet. The resolveParameter() method is called exactly twice per test: once for the TestState and once for the Floor. We use getOrComputeIfAbsent() so that the same Dsl instance is returned both times.

(5) We use the parameterType to decide what to return.
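JUnit's store can only be exercised inside a running extension, so the following stdlib-only sketch merely imitates the getOrComputeIfAbsent() behavior described above. FakeExtensionStore and Dsl are hypothetical names, and the namespace is reduced to the test method name. It shows why both parameters of one test share a Dsl while different tests each get their own.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-ins for the two parts of the Dsl.
class TestState {}
class Floor {}

record Dsl(TestState testState, Floor floor) {
    static Dsl create() {
        return new Dsl(new TestState(), new Floor());
    }
}

// Imitates a per-namespace store; here the namespace is just the test method name.
class FakeExtensionStore {
    private final Map<String, Dsl> dslPerTest = new HashMap<>();
    private int created = 0;

    // Like getOrComputeIfAbsent(): the creator only runs if this test has no Dsl yet.
    Dsl getOrCreate(String testMethod) {
        return dslPerTest.computeIfAbsent(testMethod, key -> {
            created++;
            return Dsl.create();
        });
    }

    // Mirrors resolveParameter(): hand out the part of the Dsl matching the parameter type.
    Object resolveParameter(String testMethod, Class<?> parameterType) {
        var dsl = getOrCreate(testMethod);
        if (parameterType.equals(TestState.class)) return dsl.testState();
        if (parameterType.equals(Floor.class)) return dsl.floor();
        throw new IllegalArgumentException("Unsupported parameter type " + parameterType);
    }

    int created() {
        return created;
    }
}
```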

In addition to the UnitTestExtension shown here, we can of course write another extension, the IntegrationTestExtension. It looks the same, but uses (key) -> testDslOf(integrationFloor()) as the creator function. The TestState remains the same, but the Floor implementation is an IntegrationFloor, which contains JPA repositories instead of InMemoryDoubles.

Since the TestState only knows the Floor interface and not the concrete implementation, we can now turn any @Integration test into a @Unit test by changing a single annotation. This property of the TestDsl is particularly helpful for legacy code, which often keeps a lot of domain logic in the database.

In legacy code you can start by writing integration tests to catch regressions. Once you have pulled the domain logic out of the database and into the application code, you can convert the tests you wrote at the start into unit tests by just changing the annotation. Without a TestDsl, you would have to completely rewrite them at unit level, which is why many teams do not do this, remain stuck with slow integration tests and cannot iterate much faster despite increasing test coverage.
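Why a single annotation is enough can be shown without JUnit: the test body depends only on the Floor interface. In this sketch, all names are hypothetical, and the second floor uses a list-backed double merely as a stand-in for the IntegrationFloor's JPA repositories, which would need a database.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Supplier;

record Book(String id, String title) {}

// Hypothetical port.
interface Books {
    void add(Book book);
    Optional<Book> findById(String id);
}

// Hypothetical floor reduced to one port.
interface Floor {
    Books books();
}

// Map-based double, standing in for the unit floor's InMemoryDoubles.
class MapBooks implements Books {
    private final Map<String, Book> byId = new HashMap<>();
    public void add(Book book) { byId.put(book.id(), book); }
    public Optional<Book> findById(String id) { return Optional.ofNullable(byId.get(id)); }
}

// A differently implemented port; in a real IntegrationFloor this would be
// a JPA repository talking to a database.
class ListBooks implements Books {
    private final List<Book> rows = new ArrayList<>();
    public void add(Book book) { rows.add(book); }
    public Optional<Book> findById(String id) {
        return rows.stream().filter(book -> book.id().equals(id)).findFirst();
    }
}

class Floors {
    static Floor unitFloor() {
        var books = new MapBooks();
        return () -> books;
    }

    static Floor listBackedFloor() {
        var books = new ListBooks();
        return () -> books;
    }
}

class FloorAgnosticTest {
    // The test body only knows the Floor interface.
    static boolean savedBookCanBeFound(Floor floor) {
        var book = new Book("b-1", "Refactoring");
        floor.books().add(book);
        return floor.books().findById("b-1").equals(Optional.of(book));
    }

    // Swapping @Unit for @Integration boils down to choosing another Floor factory.
    static boolean runWith(Supplier<Floor> floorFactory) {
        return savedBookCanBeFound(floorFactory.get());
    }
}
```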

Alternatives

“Testing Without Mocks” [9] follows a similar approach to the TestDsl. With that approach, however, you have to modify your production code more, because special test doubles, the so-called “nullables” [10], are placed directly in the production code.

The refactoring tools of our IDE can also mitigate some forms of structure cementation found in our initial test [Initial test]. “Change Signature” is the most helpful refactoring against structure cementation. It works great when you need to remove constructor parameters. Adding parameters, however, only works well if the default values inserted in the tests are very simple and do not depend on other state in the test. These tools can also introduce bugs when default values are inserted not only into test code but also into production code and you forget to change them. The Dsl builders are clearly more flexible than the Change Signature refactoring and better at preventing entity cementation. The same applies to the TestState, which allows more flexible customization of the ports. Refactoring tools are therefore not a replacement for the TestDsl, but a supplement to it.

The refactoring framework OpenRewrite [11] looks promising, but seems to be designed more for framework migrations. It is therefore also more of a supplement to the TestDsl, which focuses on domain logic.

Interim conclusion

With the TestDsl we can make our test setup:

- standardized for all tests,
- complete (nothing needs to be outsourced),
- compact (although nothing has been outsourced),
- free of irrelevant details,
- with relevant details highlighted,
- readable,
- low-maintenance,
- parallelizable,
- fast,
- and free of structure cementation.

The TestDsl is of course not free. But it is not expensive either. We have to create a foundation with Extension, TestState, Floor and BaseInMemoryDouble. Experience from several JVM and Node projects shows that the maintenance effort is low once this foundation has been laid.
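BaseInMemoryDouble is part of the foundation named here, but the article does not show it. A minimal sketch, assuming entities expose their id through a hypothetical HasId interface: the base class implements storage once, and a concrete double such as InMemoryBooksDouble only fixes the type parameters.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical: every entity can report its id.
interface HasId<ID> {
    ID id();
}

abstract class BaseInMemoryDouble<ID, E extends HasId<ID>> {
    // LinkedHashMap keeps insertion order, which makes findAll() deterministic.
    private final Map<ID, E> entities = new LinkedHashMap<>();

    public void add(E entity) {
        entities.put(entity.id(), entity);
    }

    public void addAll(Collection<? extends E> all) {
        all.forEach(this::add);
    }

    public Optional<E> findById(ID id) {
        return Optional.ofNullable(entities.get(id));
    }

    public List<E> findAll() {
        return new ArrayList<>(entities.values());
    }
}

// Hypothetical, simplified entity types.
record BookId(String value) {}
record Book(BookId id, String title) implements HasId<BookId> {}

// A concrete double only fixes the type parameters; port-specific queries would go here.
class InMemoryBooksDouble extends BaseInMemoryDouble<BookId, Book> {}
```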

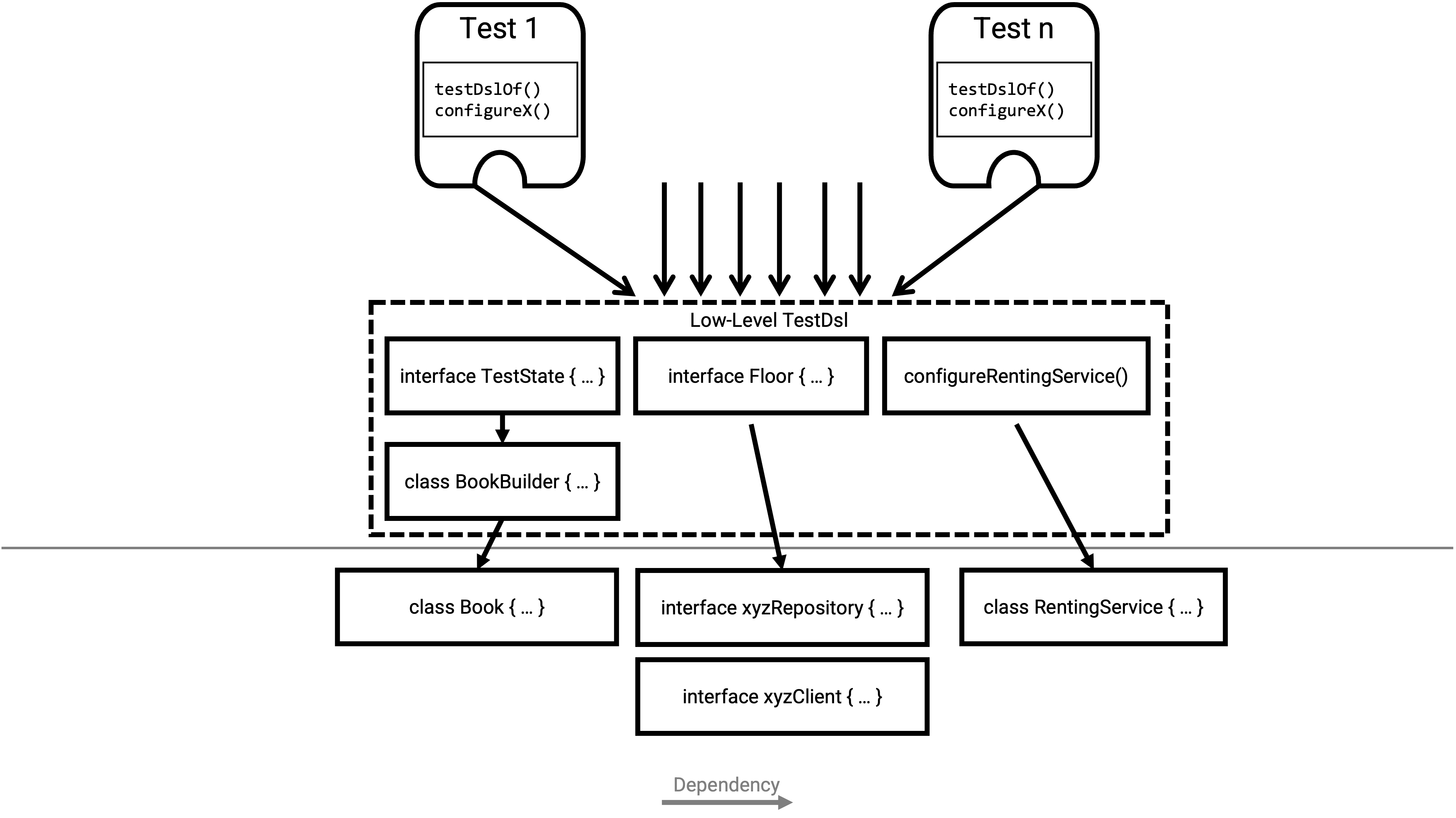

You rarely have to create new entities; much more often, you work with existing entities and services and restructure them. The initial investment then pays dividends continuously. Since the entire setup is done via the Dsl [see figure], only the Dsl is affected by structural changes.

The figure also shows the actual trade-off we make with the Dsl: we lose feedback on our setup. Without a Dsl, you notice whether the setup is “annoying” when writing tests. If you have to write a lot of code for testing, you naturally ask yourself whether there is an easier way. There is a natural pressure to improve the structure. This “annoying” setup can now be hidden in the Dsl. It is therefore all the more important to use the Dsl only for the setup, to keep it as dumb as possible, to define as few combo builders as possible, and to keep asking whether the structure is on the right track when adapting the Dsl.

Outlook

In this part we have seen how to solve structure cementation through redundancy with the TestDsl.

In part 2, we will take a closer look at the concepts on which the TestDsl is based. We will go into the design of the builder, how to combine the Dsl with @SpringBootTest, what the difference between a high-level and a low-level TestDsl is, how to keep test doubles synchronized with the production code, and why the excessive use of mocking frameworks also leads to structure cementation.

In part 3, we will see how to prevent the other kind of structure cementation: testing unstable elements instead of modules.

If you want even more information on the topic, you can view the TestDsl example code on GitHub [12] or watch the presentation on “Beehive Architecture” [13] (in 🇩🇪), which also covers the TestDsl.

This article was originally published in Java Aktuell 4/24 in 🇩🇪. It is translated and republished here with the magazine’s permission.

References

- [1] M. Fowler, “An example of preparatory refactoring.” 2015. Available: https://martinfowler.com/articles/preparatory-refactoring-example.html
- [2] A. Belshee, “Arlo’s Commit Notation.” 2018. Available: https://github.com/RefactoringCombos/ArlosCommitNotation
- [3] K. Beck, “Mastering Programming.” Available: https://tidyfirst.substack.com/p/mastering-programming
- [4] G. Meszaros, “Test Double.” 2011. Available: http://xunitpatterns.com/Test%20Double.html
- [5] M. Fowler, “Object Mother.” 2006. Available: https://martinfowler.com/bliki/ObjectMother.html
- [6] A. Cockburn, “Hexagonal architecture.” 2005. Available: https://alistair.cockburn.us/hexagonal-architecture/
- [7] Spring Team, “Unit Testing.” 2006. Available: https://docs.spring.io/spring-framework/docs/2.0.4/reference/testing.html#unit-testing
- [8] JUnit 5 Team, “JUnit 5 User Guide - Extension Model.” Available: https://junit.org/junit5/docs/current/user-guide/#extensions
- [9] J. Shore, “Testing Without Mocks: A Pattern Language.” 2023. Available: https://www.jamesshore.com/v2/projects/nullables/testing-without-mocks
- [10] J. Shore, “Nullables.” 2023. Available: https://www.jamesshore.com/v2/projects/nullables/testing-without-mocks#nullables
- [11] Moderne, “Large-scale automated source code refactoring.” 2024. Available: https://docs.openrewrite.org/
- [12] R. Gross, “TestDsl (Avoid structure-cementing Tests).” 2024. Available: https://github.com/Richargh/testdsl
- [13] R. Gross, “Beehive Architecture 🇩🇪.” 2023. Available: http://richargh.de/talks/#beehive-architecture